SHORT NEWS

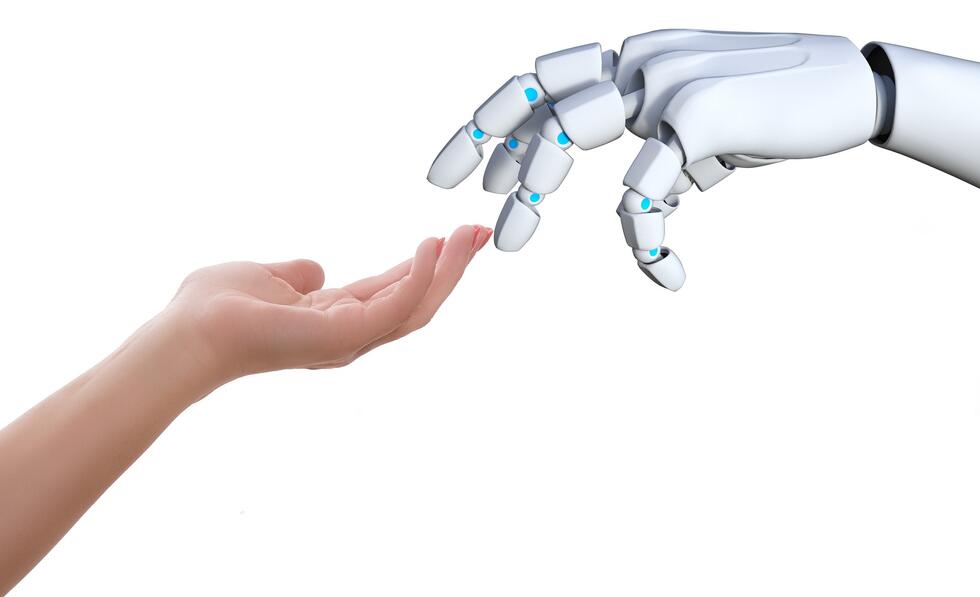

Swiss researchers decode AI thought processes

Researchers have developed a new method for decoding the thinking of artificial intelligences. This is particularly important for situations in which self-learning computer programmes make decisions with implications for human lives.

"The way these algorithms work is opaque, to say the least," study leader Christian Lovis of the University of Geneva (Unige) said in a statement. "How can we trust a machine without understanding the basis of its thinking?" An international research team led by the University of Geneva has been investigating precisely this question.

Artificial intelligences (AIs) are trained with large amounts of data. Like a toddler learning its native language without being aware of the grammar rules, AI algorithms can learn on their own to make the right choices by comparing large amounts of input data.

However, how the algorithms of these self-learning computers represent and process their input data internally is a "black box". So-called interpretability methods are meant to shed light on this. They show which data and factors are decisive for the AI's decisions.

Which approach is right?

In recent years, various such methods have been developed. However, these interpretability methods often lead to different results, which raises the question of which approach is the right one. Choosing among the many available approaches for a particular purpose can therefore be difficult, the study notes.

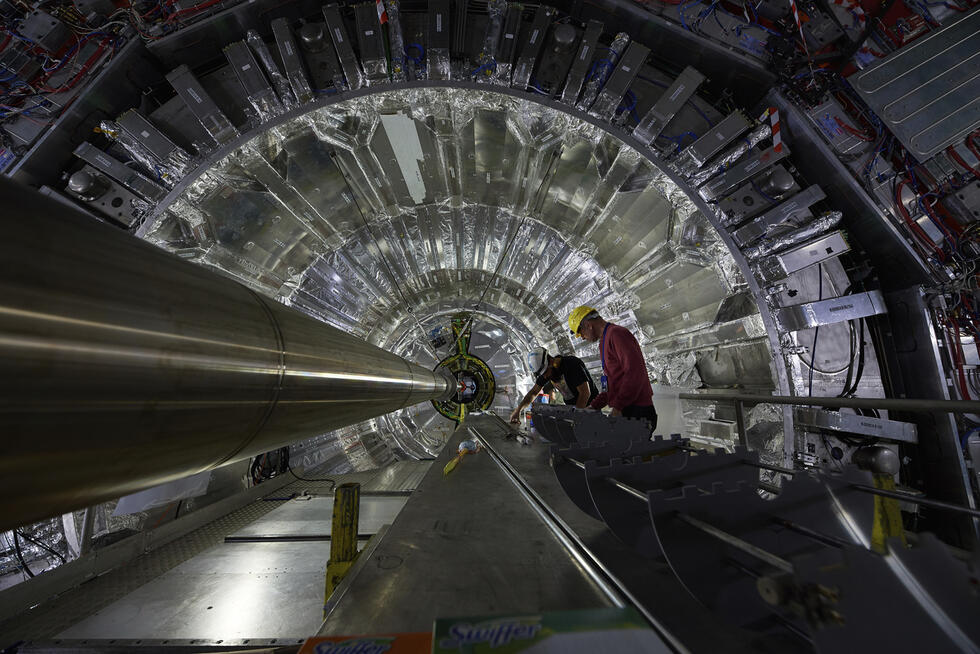

The new method, developed by researchers from the University of Geneva, the University Hospital of Geneva (HUG) and the National University of Singapore (NUS), can test the reliability of these different interpretability methods. The results were recently published in the journal Nature Machine Intelligence.