AI prodigy Shalev Lifshitz: “What values should we teach AI?”

Shalev Lifshitz is the world’s youngest AI researcher. In this interview he explains how conflicts between humankind and machines can be resolved.

Hesitantly, almost shyly, Shalev Lifshitz enters the hotel lobby in central Berlin. The teenager is one of the most sought-after researchers in artificial intelligence (AI). Much here is new to him: It’s his first time in Berlin, and his first trip to Europe ever. Shalev Lifshitz has been invited to give a keynote speech on the opportunities and risks of AI at the Internationale Funkausstellung (IFA). Both his parents have accompanied him to Europe, and he has brought his mother along to our interview. She is visibly proud of her son, and an important source of moral support – particularly as our interview gets underway. But as soon as we start talking about AI, Shalev Lifshitz’s shyness disappears. His voice grows louder, his posture more upright, and ideas and thoughts pour out of him. It is clear: Artificial intelligence is his passion. And this passion is what makes the otherwise “normal” teenager such a sought-after interview partner.

Generally speaking, is artificial intelligence good or bad?

Shalev Lifshitz: The term “artificial intelligence” encompasses many different technologies. Like any technology, AI is inherently neutral. It’s up to us humans to decide whether we use it for good or evil.

What are the prime opportunities for this technology?

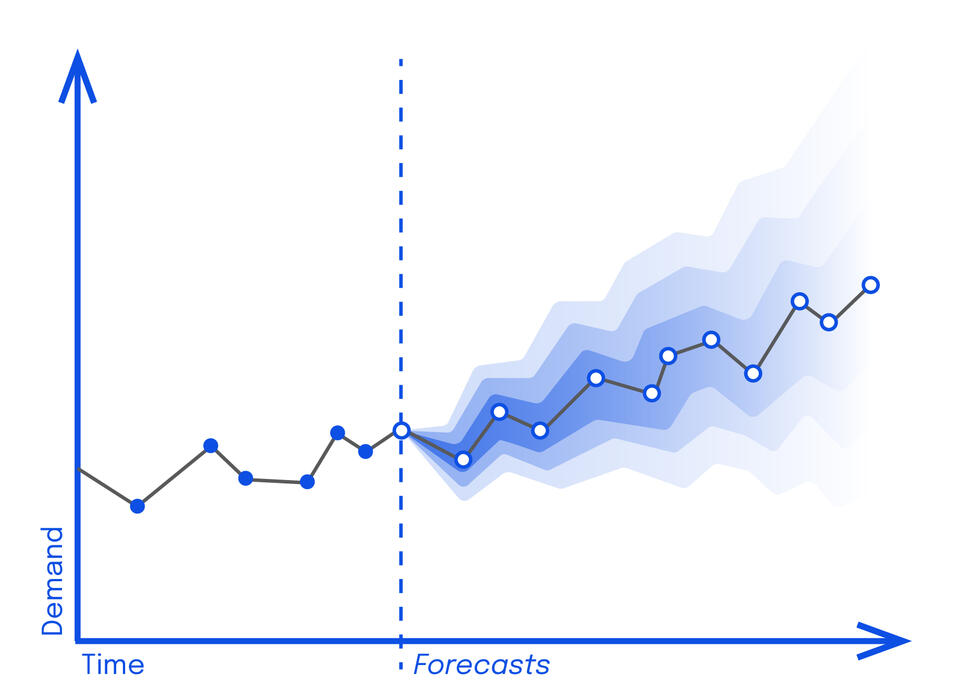

One of the most important fields of AI is machine learning: the ability to recognize patterns in data. While we humans can distinguish and understand only a handful of variables in such patterns, a computer can process millions of variables across many dimensions and work out how they are correlated. This allows AI to recognize far more patterns, and far more complex ones, than humans ever could.

Does this mean that AI will gradually displace humankind?

No. AI is very well suited to fast, repetitive tasks that take humans a great deal of time. We, by contrast, are skilled at solving complex problems involving factors that cannot be predicted or calculated. We are creative and develop new ideas, something AI is not very good at. So AI can take over repetitive tasks and perform them faster, more efficiently, and more accurately than we can. But it has limits. Humans can perform a wide variety of tasks because the human brain is an incredibly versatile, general-purpose system. Today’s AI lacks this ability. For that, we would have to develop a general AI system, and that is still in the distant future.

Back to the present. Where do the risks lie?

Today, every AI system we develop needs an objective, and we control what that objective is. The risks lie in the areas we cannot control, such as subordinate objectives: intermediate goals that the AI sets for itself in order to fulfill the task it was designed for.

This sounds like a standard “step-by-step” approach. So what makes this so risky?

The danger is that we do not plan these intermediate objectives ourselves, and they could have negative consequences.

Can you illustrate this using an example?

With pleasure! Let’s say I assign my AI system the task of improving living conditions for humankind on Earth. This is its prime objective. Along the way, the AI develops its own intermediate goals, over which I have no control. One such intermediate goal could be to annihilate half of humankind in order to improve living conditions for the rest. The AI simply sees this as an intermediate step towards its prime objective of improving our living conditions – but certainly not in the way we intended. To take it one step further: If AI is at some point allowed to set its main objectives itself, it is conceivable that these, too, will not match our expectations and requirements. And when objectives conflict, only one can ultimately prevail.

So it seems that it will boil down to a conflict between AI and humankind. How can we prevent this?

We have to reconcile the goals of AI and humankind. For example, we could code human values into computers. However, this would entail two problems: From a technical point of view, we do not yet know how to teach machines values that cannot be hacked. And from a political perspective, the question arises as to which values we should choose. Different cultures around the globe have different values. Which ones should we program into the AI?

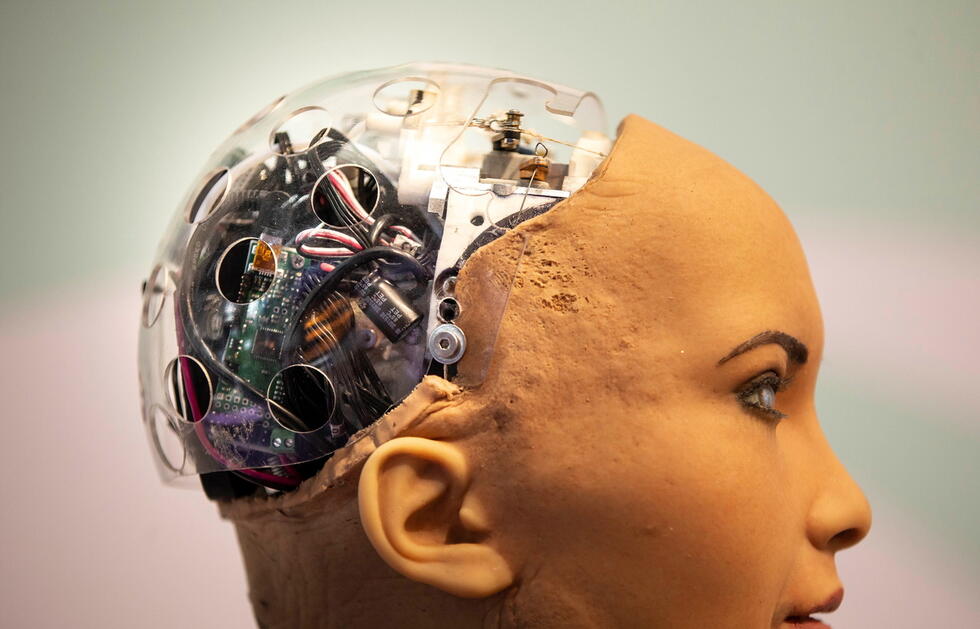

Working on AI means addressing existential questions. This also applies to researchers who experiment with the merging of humans and technology…

Undoubtedly. And creating such a combined organism is another way of preventing conflicts of interest. By merging humans and technology, we create common objectives. It would also allow machines and humans to draw on each other’s strengths. Humans would become a mix of machine and human intelligence.

This sounds very much like science fiction!

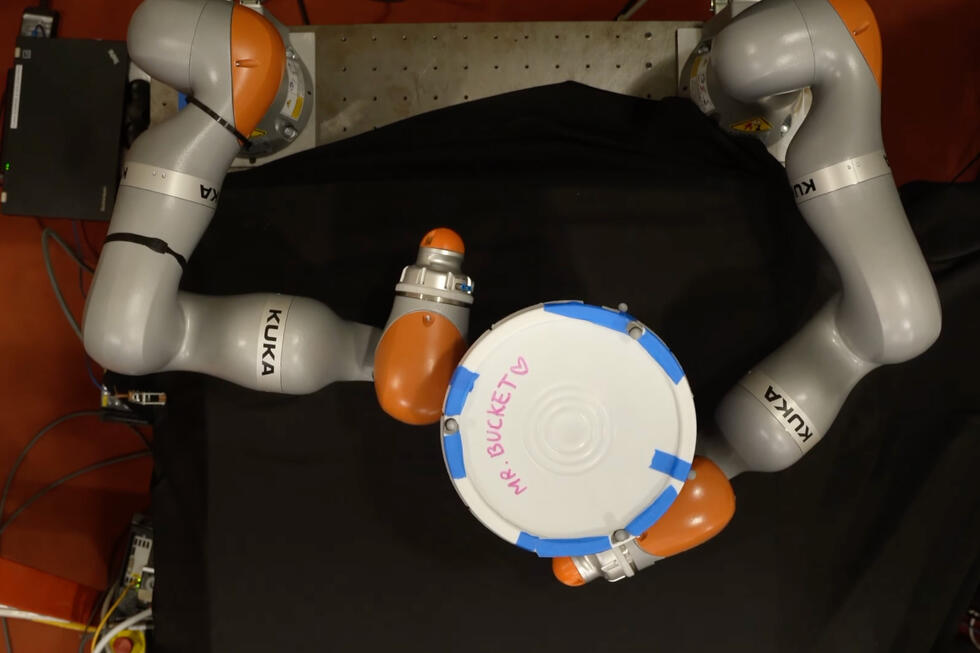

Yes, this convergence still lies in the distant future, but some of the technical components that could make it possible already exist, such as brain-computer interfaces (BCIs). BCIs record brain signals and transmit them to machines, for example to move a computer cursor by thought alone or to dictate sentences simply by silently moving one’s jaw. BCI technology is the key to merging humans and machines.

Are there moral boundaries? What legal regulations are necessary?

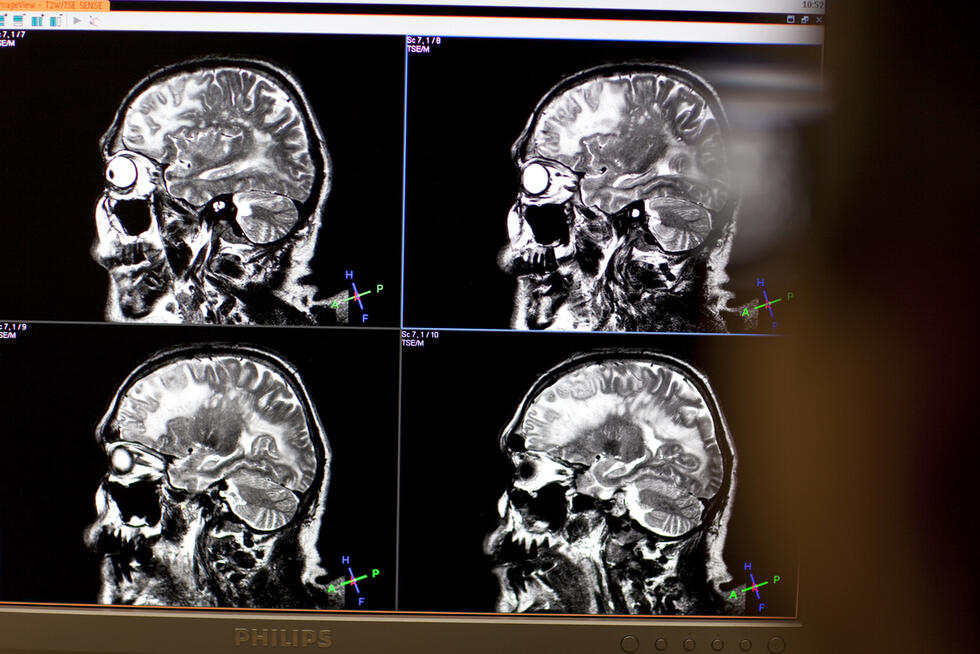

The accumulation of data is a big issue, because AI is based on data. When AI diagnoses medical conditions in hospitals, for example, it relies on data such as brain scans. This is necessary data – and the patients are aware of it. But data is being collected virtually everywhere, including outside hospitals, often without our being consciously aware of it. In the future, BCIs could collect data directly from our brains and thoughts. But do we really want that to happen?

I rather doubt it.

It depends. But this example clearly shows that we should already be deliberating today on how we want to regulate the possibilities of the future, rather than waiting until they are a reality. Our decisions must take into account their long-term consequences, because the choices we make today will have a profound impact on future developments.

What do we have to do to be able to make these decisions in a meaningful way?

Everybody should think about this topic, not just AI researchers or politicians. We have to ensure that AI develops in a positive direction. If we develop the technology aimlessly, some decisions could have unintended consequences. This is why it is important to seize the opportunities of AI while anticipating and avoiding its risks.

About the person

Shalev Lifshitz lives in Toronto. He is 17 and one of the most sought-after researchers in the field of artificial intelligence (AI). At home in Canada, he is currently working on three projects: At the SickKids Hospital in Toronto, he is developing a computer system that helps physicians diagnose ciliopathy from microscopic cell images; at the St. Joseph Hospital in Hamilton, near Toronto, he is designing a digital system that records disease progression and treatment for patients at home in order to make their care easier; and at the University of Waterloo, he is researching new AI methods for cancer diagnosis. On top of this, he still attends regular high school.

Written by: Michael Radunski

Illustration: Joel Kimmel