Identifying technology risks in time

Every new technology can also bring problematic side effects. The technology assessor Armin Grunwald helps identify such risks early. In this interview, he talks about the consequences of emotionally competent AI and explains which developments will have the greatest impact on our lives.

What technology in particular are you currently focusing on?

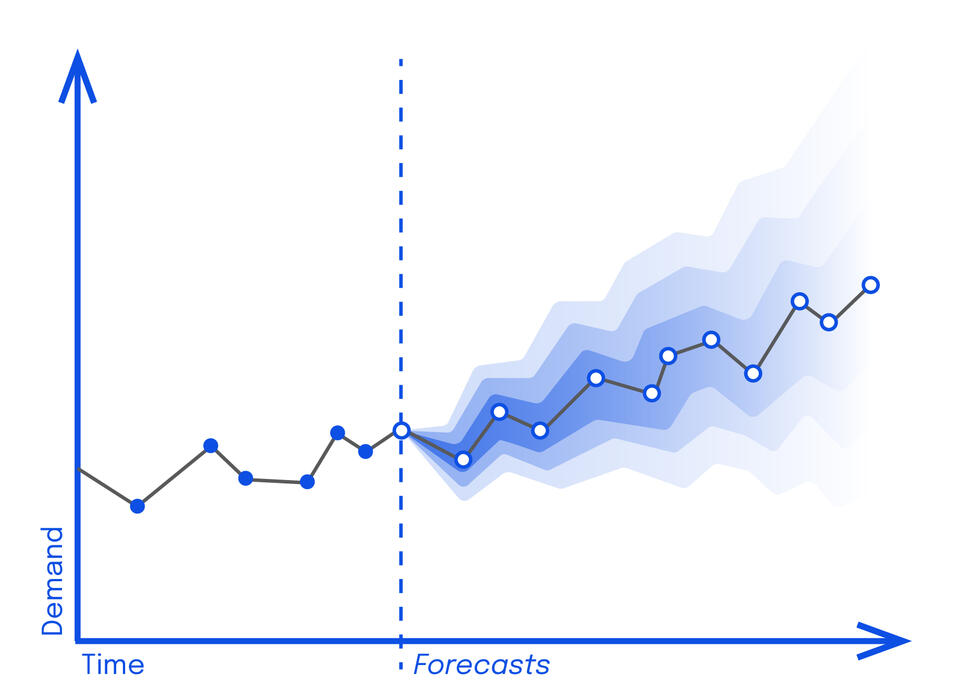

Armin Grunwald: I am currently focusing intensively on how dependent our entire infrastructure has become on digital technology and, as part of my work for the German Bundestag, on the water supply in particular. What would happen in the event of a large-scale hacker attack? The other issue that occupies a great deal of my time is ADM: automated decision-making technologies.

In other words, artificial intelligence that makes decisions for us.

Correct. Self-driving cars, for example, do this all the time. But the question is: Who bears responsibility if something goes wrong? The programmer? An ethics panel? Such questions are certainly not new, but they remain controversial.

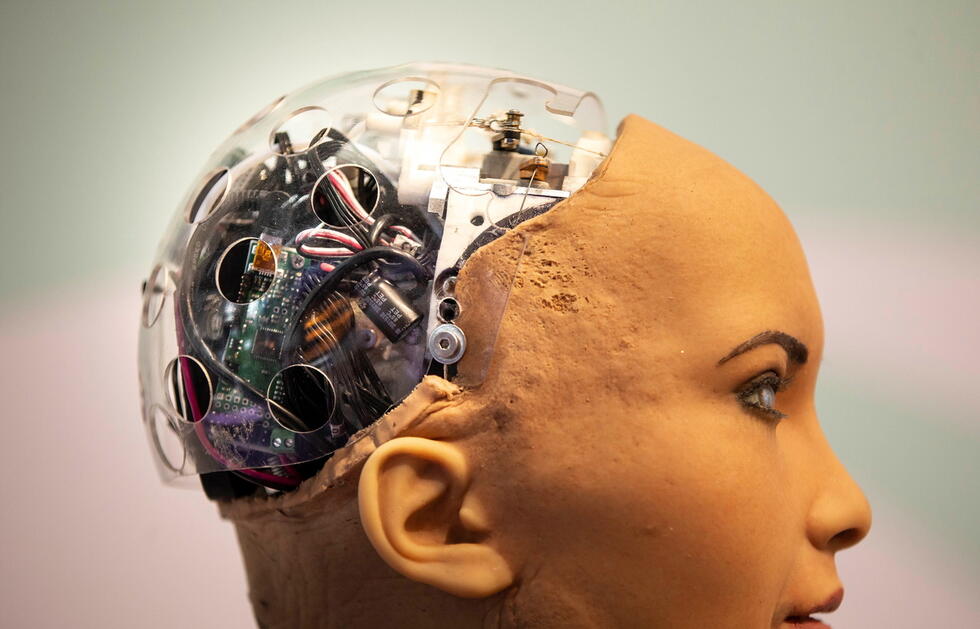

A major research topic in the field of AI concerns systems that recognize or simulate human emotions. What are the possible implications of this technology?

Algorithms that detect our emotions run a very clear risk of violating personal privacy rights or even human rights. Manipulating our shopping behavior or disregarding privacy through facial recognition is just the beginning.

Emotion recognition also offers tremendous opportunities. Learn more here: AI with EQ

Where does it end?

Admitting AI-based lie detectors as evidence in a court of law, for example, would be disastrous. The systems could make unfair decisions or malfunction in other ways, with real legal consequences for the people involved. Another serious risk is the threat to freedom of thought.

How could this happen?

Emotional AI is not yet capable of looking into people’s minds. It merely recognizes the emotions shown, which, to a certain extent, we can control. For instance, we can simply feign joy. But this would change if one day AI were able to read our minds.

And how reliable do you believe current emotion recognition systems are?

As I said, AI still only perceives the emotions we show. It cannot yet determine whether an emotion is genuine. Then again, even we humans don’t always interpret the emotions of our fellow human beings correctly. When it comes to the emotions of machines, though, we are likely to encounter considerably greater problems.

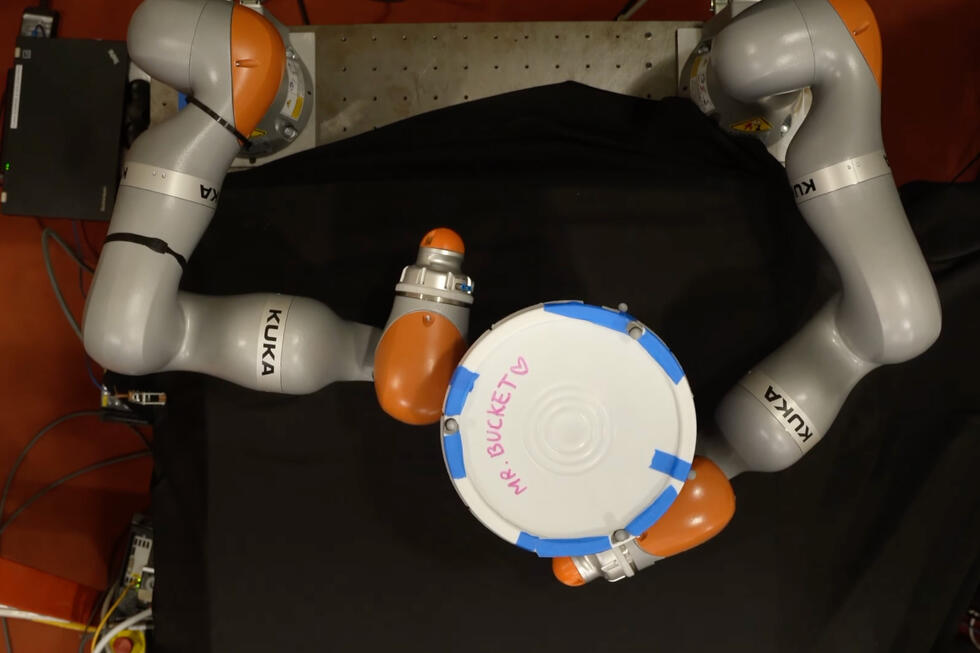

You mean with robots that simulate emotional intimacy?

Precisely. Imagine a highly charismatic AI ending up in the wrong hands and being used to deceive us. With a human deceiver, we might be able to see through the deception thanks to telltale signals. A robot may be able to hide such signals perfectly, or may not produce them at all.

But at least we know that its emotions are not real.

Certainly, most of us are aware that the emotions are merely programmed. However, people suffering from dementia or extremely lonely people might mistake these emotions for real ones, or at least anthropomorphize the systems.

What problems might this raise?

For example, a key ethical question is whether we should use Pepper robots, which perceive emotions and respond to them appropriately, in dementia wards. From a utilitarian point of view, this may well be legitimate, because it helps everyone: the patients and a care system that is facing a shortage of qualified healthcare workers. From the point of view of human dignity, on the other hand, fobbing off people with dementia with robots is problematic. They have a right to human companionship.

Could one say that society is shirking its responsibilities and leaving the matter to robots?

This is a real danger. It’s a familiar mechanism: Whenever there is a problem, society clamors for a technological solution instead of rethinking its own behavior.

Finally, on a more general note: Which technology will have the most transformative impact on our lives?

Digital technologies are, of course, far from having reached their full potential. Nevertheless, the greatest changes will not come from developments in individual fields such as robotics, digitally assisted biotechnology, or AI.

But rather?

We have not yet really grasped what digitalization means as a societal process. Our entire social structure, and even our image of ourselves as human beings, is being called into question. This is why we should finally take a proactive approach. For example, we urgently need to start regulating the new technologies of large corporations at an early stage.

The EU intends to define global standards

In the spring of 2021, the European Commission submitted a draft regulation intended to regulate AI by law for the first time. The draft provides for binding requirements for technologies that could violate fundamental freedoms. Currently, however, the draft legislation imposes only transparency obligations on emotion recognition systems.

Learn more: New rules for artificial intelligence – questions and answers (europa.eu)

Personal details

Armin Grunwald (62) is a physicist and professor of the philosophy of technology at the Karlsruhe Institute of Technology. He also heads the German Bundestag’s Office of Technology Assessment.

Written by:

Illustration: Reza Bassiri